Can AI Really Track Your Nutrition?

AI is everywhere, including nutrition tracking. Lots of apps, Nutriely among them, use AI to enhance their features. Some applications go as far as labeling themselves as dedicated AI Nutrition tools. To the average consumer this may sound awesome. But with all this hype inevitably comes a lot of confusion and misinformation about how AI can be used for nutrition tracking.

What is the problem?

In short, AI should not be relied upon for any specific nutrition advice or information.

In case you are unsure what exactly these AI tools (ChatGPT, Claude or Gemini) are: they are all large language models (LLMs). An LLM is a type of AI model designed to understand and generate human-like text. It is trained on vast amounts of text data (such as large parts of the internet) to process and predict language patterns. It goes through several complex phases of training to turn from a simple token predictor into the helpful assistant you can find in most applications.

At first glance it appears to have a lot of knowledge about almost any topic you can think of. It truly is amazing how much it can tell you about the world. However, remember that under the hood, behind the fluent responses, the information is just a memory, a vague recollection of its training data. The training data is no longer there, and the model does not pull from a source directly. Instead, it has learned statistical patterns and predicts the next token that best matches what it saw during training.
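To make "statistical patterns" a bit more concrete, here is a deliberately tiny sketch: a bigram model that counts which word followed which in a made-up training text, then predicts the next word from those counts alone. It is a toy, not how ChatGPT works internally (real LLMs use neural networks over tokens), and the training text and function names are hypothetical. It does show the key idea: after training, only the counts remain, not the text. Chat assistants usually sample the next token rather than always taking the most likely one, which `sample_next` imitates.

```python
from __future__ import annotations

import random
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for every word, which words followed it in the training text."""
    words = text.lower().split()
    counts: defaultdict[str, Counter] = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return dict(counts)  # only the counts are kept; the text itself is discarded


def predict_next(model: dict[str, Counter], word: str) -> str | None:
    """Greedy prediction: the most frequent follower, or None if the word was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def sample_next(model: dict[str, Counter], word: str, rng: random.Random) -> str | None:
    """Random prediction weighted by the counts, so repeated calls can differ."""
    followers = model.get(word.lower())
    if not followers:
        return None
    options = list(followers)
    weights = [followers[o] for o in options]
    return rng.choices(options, weights=weights, k=1)[0]


# Hypothetical training text, for illustration only.
model = train_bigrams("an apple a day an apple a week an egg a day")
print(predict_next(model, "an"))  # apple ("apple" followed "an" twice, "egg" once)
print([sample_next(model, "an", random.Random(seed)) for seed in range(5)])
```

The greedy version always answers the same way; the sampled version can give different answers to the same question, which mirrors the inconsistency described later in this article.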

Due to various practical limitations, models work with tokens (chunks of text, often whole words or word pieces) instead of individual characters. And because the model works with tokens, it is terrible at many tasks that require character-level understanding.
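The token problem can be illustrated with a toy tokenizer. The sketch below uses greedy longest-match splitting over a tiny, hypothetical vocabulary; real tokenizers (for example byte-pair encoding) learn vocabularies of tens of thousands of pieces or more, and the exact splits differ between models. The takeaway is that a model may see "strawberry" as two units rather than ten letters, and "3.11" as "3", ".", "11", where "11" is a single symbol rather than the digits 1 and 1.

```python
from __future__ import annotations


def tokenize(text: str, vocab: set[str]) -> list[str]:
    """Greedy longest-match tokenizer (a simplification of real subword tokenizers).

    At each position, take the longest vocabulary entry that matches;
    characters not covered by the vocabulary become single-character tokens.
    """
    max_len = max(len(piece) for piece in vocab)
    tokens: list[str] = []
    i = 0
    while i < len(text):
        for length in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + length]
            if piece in vocab or length == 1:
                tokens.append(piece)
                i += length
                break
    return tokens


# Hypothetical vocabulary, chosen only to illustrate the idea.
VOCAB = {"straw", "berry", "3", ".", "11", "9"}

print(tokenize("strawberry", VOCAB))  # ['straw', 'berry']
print(tokenize("3.11", VOCAB))        # ['3', '.', '11']
print(tokenize("3.9", VOCAB))         # ['3', '.', '9']
```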

If we are going to track nutrition with an LLM, we need it to get data about foods and to do calculations with that data for us. Let’s look at some of the problems we will come across when doing so.

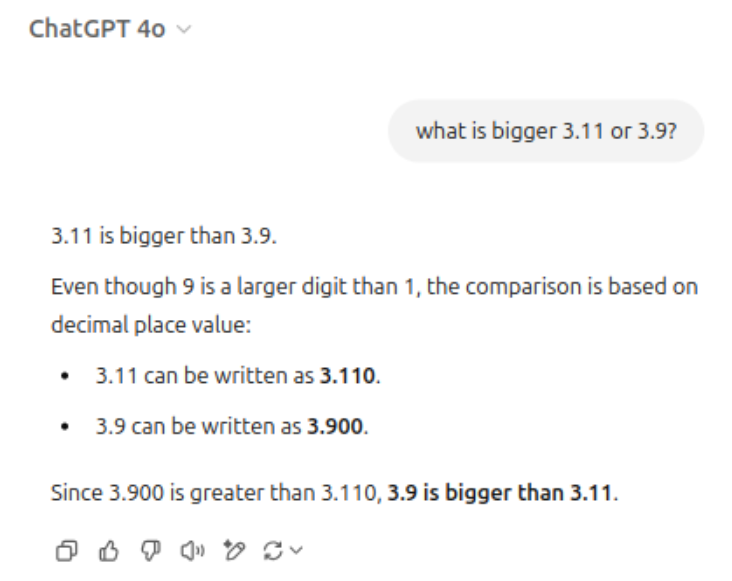

To track nutrition we need to perform some basic math. But in many ways, simple math is a big problem for LLMs. Just try asking the model: which is bigger, 3.11 or 3.9?

*Example from GPT-4o on 18.02.2025. It has since been fixed, but similar logic holes will always re-appear.

As you can see, the model gets the answer wrong at first, then continues to reason through it and in the end contradicts itself by arriving at the right answer. What is more, the model is not consistent with its responses: sometimes it reaches the right answer, and sometimes not. The model is not actually calculating anything; it is just trying to predict what comes next. Its next-token predictions vary from run to run because there is an element of chance in how the next token is chosen. In this particular example the confusion likely comes from the fact that the training data contains a huge number of cases where 3.11 really is the "larger" value: Python versions, book chapters, etc.
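The 3.11 vs 3.9 confusion is easy to reproduce in ordinary code, because the same string means different things in different contexts. Read as decimal numbers, 3.9 is larger; read as version numbers (major.minor, like Python releases), 3.11 comes after 3.9. A script only has to be told which interpretation to use, while an LLM has to guess it from patterns. The helper names below are just for illustration.

```python
from __future__ import annotations

from decimal import Decimal


def as_number(text: str) -> Decimal:
    """Interpret the text as a decimal number: 3.11 means three point one one."""
    return Decimal(text)


def as_version(text: str) -> tuple[int, ...]:
    """Interpret the text as a version: 3.11 means major 3, minor 11."""
    return tuple(int(part) for part in text.split("."))


a, b = "3.11", "3.9"
print(as_number(a) > as_number(b))    # False: 3.11 is smaller than 3.90
print(as_version(a) > as_version(b))  # True: minor version 11 comes after 9
```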

The data labelers working on fine-tuning the models are trying to fix such issues by training the type of responses the model should give: reasoning things through, going step by step and reducing the amount of guessing on each next token. The models have already improved tremendously; however, they still have a long way to go before their math skills are truly reliable.

Note: There are newer types of models, like OpenAI's o3 and DeepSeek-R1, that are trained using reinforcement learning techniques and are much better at math tasks; they are likely to get the above example right. However, they still have many potential issues and are susceptible to the same risks as previous models.

If you ask an AI model to track your nutrition, it is likely to return something very believable, in many cases even close to accurate. But sometimes something as simple as a decimal value can completely throw the model off, and it returns something obviously wrong. And if this is part of a bigger task, you are likely not to even notice, because the rest of the response seems convincing and true.

So already we see that relying on calculations is iffy at best. But what about the data?

If you ask for the nutrition data of specific products, most of the time the model gives you a good approximation for common foods. It is a great tool for a quick, low-stakes reference.

Depending on how varied your diet is, every now and then you might run into some weird numbers. Have you heard of the term "hallucinations"? They happen partly because LLMs have a hard time admitting that they do not know something. The latest models are much better at this, but in certain situations they are still likely to respond with made-up information. In the context of nutrition tracking this can result in fictional nutrition values and fictional portion sizes, and eventually lead to misleading dietary advice. Unless you confront the model and ask it to back up its information, you will never know when it has started to hallucinate.
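One cheap way to catch some made-up numbers is an internal consistency check. Energy can be estimated from the macronutrients with the general Atwater factors (about 4 kcal per gram of protein, 4 per gram of carbohydrate and 9 per gram of fat). The sketch below flags values whose stated calories are far from that estimate. The 15% tolerance is an arbitrary assumption: fiber, alcohol, sugar alcohols and rounding on real labels can legitimately shift the numbers. It also cannot catch a hallucination that happens to be internally consistent, so it complements checking a real source rather than replacing it.

```python
def atwater_kcal(protein_g: float, carbs_g: float, fat_g: float) -> float:
    """Estimate energy (kcal) using the general Atwater factors 4/4/9 kcal per gram."""
    return 4 * protein_g + 4 * carbs_g + 9 * fat_g


def looks_consistent(kcal: float, protein_g: float, carbs_g: float, fat_g: float,
                     tolerance: float = 0.15) -> bool:
    """True if stated calories are within `tolerance` (relative) of the macro-based estimate."""
    estimate = atwater_kcal(protein_g, carbs_g, fat_g)
    if estimate == 0:
        return kcal == 0
    return abs(kcal - estimate) / estimate <= tolerance


# Hypothetical values an assistant might report for 100 g of some food.
print(looks_consistent(250, protein_g=10, carbs_g=30, fat_g=10))  # True: 40 + 120 + 90 = 250
print(looks_consistent(90, protein_g=10, carbs_g=30, fat_g=10))   # False: 90 is far from 250
```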

AI is also prone to exaggerated nutrition claims, sometimes even indirectly promoting something without scientific backing. Once again, the models keep improving as fine-tuning produces better, expert-based answers, but the memory of the internet is still there, so you have to be careful with any advice AI gives you.

To sum up, you might get lucky and receive an awesome meal plan with closely approximated nutrition data. Good for you.

But what happens when you combine iffy calculations with potentially hallucinated data?

Some call it AI Nutrition. I would not suggest relying on it for anything beyond giggles and dinner conversation.

As you can hopefully see, LLMs are by design not an all-knowing, trusted source of information to base your life around (yet).

It may sound convincing, it may seem realistic, definitely in the ballpark. Good enough, right? Until you realize something is off and decide to double-check; then you can end up going down a rabbit hole trying to figure out what is true and what is just generated text.

It’s not all bad

Despite what it may sound like, I am one of the most pro-LLM people you will find. And so I want to leave you on a positive note.

Assistants like ChatGPT give you tools to improve the accuracy of their answers. More specifically, there are two tools that can significantly help fix some of the problems above.

Internet search

Many assistants can now search the internet when asked to, or when they do not know something. Using this feature, you can provide a link to a verified food database and have the model fetch the data directly for more accurate results.
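The main benefit of fetching data from a verified database is that every number has a source, and a missing food is reported as missing instead of being filled in with a plausible guess. Below is a minimal sketch of that behaviour, assuming the data has already been downloaded into a local table. The `FoodRecord` structure, the `lookup` helper and the placeholder entry are hypothetical; real values would come from the verified database you point the assistant to.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class FoodRecord:
    name: str
    kcal_per_100g: float
    source: str  # where the number came from, so it can be traced and re-checked


def lookup(database: dict[str, FoodRecord], food: str) -> FoodRecord | None:
    """Return the stored record, or None instead of inventing a value."""
    return database.get(food.strip().lower())


# Placeholder entry, NOT real nutrition data: fill the table from a verified database.
DATABASE = {
    "example food": FoodRecord("example food", 100.0, "placeholder"),
}

print(lookup(DATABASE, " Example Food ").source)  # placeholder
if lookup(DATABASE, "dragon fruit") is None:
    print("Not in the database: look it up in a verified source instead of guessing.")
```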

Code execution

When calculating things, you could ask it to use its code feature (which writes Python under the hood) to calculate reliably, instead of just predicting what the result is likely to be. The model is good at writing the script to do the calculation, and it is good at copying the numbers into that script. By doing this we can overcome some of the math problems LLMs have.
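Here is the kind of script the code feature might write for a single meal. The model's job is only to copy the numbers into the script; the arithmetic is then done by Python rather than predicted. The per-100 g values below are hypothetical placeholders, not verified nutrition data, and the food names and `meal_totals` function are for illustration only. `Decimal` is used so results are not affected by binary floating-point quirks, which is a nicety rather than a requirement at this precision.

```python
from __future__ import annotations

from decimal import ROUND_HALF_UP, Decimal

# Hypothetical per-100 g values; in practice copy them from a verified database.
PER_100G = {
    "oats": {"kcal": Decimal("380"), "protein_g": Decimal("13")},
    "milk": {"kcal": Decimal("60"), "protein_g": Decimal("3.3")},
}


def meal_totals(portions_g: dict[str, Decimal]) -> dict[str, Decimal]:
    """Scale each food's per-100 g values by its portion and sum them, rounded to 0.1."""
    totals = {"kcal": Decimal("0"), "protein_g": Decimal("0")}
    for food, grams in portions_g.items():
        for nutrient, per_100g in PER_100G[food].items():
            totals[nutrient] += per_100g * grams / Decimal("100")
    return {k: v.quantize(Decimal("0.1"), rounding=ROUND_HALF_UP) for k, v in totals.items()}


totals = meal_totals({"oats": Decimal("50"), "milk": Decimal("200")})
print(totals["kcal"], totals["protein_g"])  # 310.0 13.1
```

An unknown food raises a `KeyError` here, which is deliberate: the script stops instead of guessing.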

With these two tools you would get much more trustworthy results. The next step would be to build the assistant so that it remembers your historical data, perhaps by using an external file where you store the LLM's outputs, and then have the LLM read that data each time you ask for advice or further calculations. You would also need to make sure it can keep the whole context in memory, so that it does not fall back into the previous problems. You could theoretically write a series of prompts that generate, self-verify and make corrections. But unless you are developing an app, the maintenance, debugging and making it all work is likely not what you had in mind when you decided to ask AI for nutrition help.
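For the "remember your historical data" step, the simplest version is a plain file that every result gets appended to, and that is re-read (or pasted back into the chat) whenever you ask a follow-up question. The sketch below assumes a JSON Lines file with one entry per line; the file layout, field names, function names and example entries are all hypothetical.

```python
import json
import tempfile
from pathlib import Path


def append_entry(path: Path, day: str, food: str, kcal: float) -> None:
    """Append one entry as a JSON line, so the history survives between chats."""
    with path.open("a", encoding="utf-8") as f:
        f.write(json.dumps({"day": day, "food": food, "kcal": kcal}) + "\n")


def daily_kcal(path: Path, day: str) -> float:
    """Re-read the whole log and sum the calories recorded for one day."""
    if not path.exists():
        return 0.0
    total = 0.0
    with path.open(encoding="utf-8") as f:
        for line in f:
            if line.strip():
                entry = json.loads(line)
                if entry["day"] == day:
                    total += entry["kcal"]
    return total


# Usage with a temporary file and hypothetical entries.
with tempfile.TemporaryDirectory() as tmp:
    log = Path(tmp) / "nutrition_log.jsonl"
    append_entry(log, "2025-02-19", "oats with milk", 310.0)
    append_entry(log, "2025-02-19", "apple", 95.0)
    append_entry(log, "2025-02-20", "apple", 95.0)
    day_total = daily_kcal(log, "2025-02-19")

print(day_total)  # 405.0
```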

So can you track nutrition using AI?

It depends on what exactly you mean by tracking nutrition. If you want quick meal ideas or suggestions, or just a ballpark estimate of what you're eating, then yes: you can simply chat with AI, get some rough, unverified information about common foods and even get it to do some calculations. But trusting it would be like trusting it for medical advice, and I would advise against that.

Instead use it like this:

- Use AI as a secondary tool, not as a solution

- Cross-check information against verified sources

- Make AI use its tools (internet search, code) to enhance its accuracy
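The cross-checking step can also be partly automated once you have reference values from a verified source. This sketch compares the numbers an assistant gave you with those reference values and labels each nutrient as ok, mismatch or missing. The `cross_check` name, the 10% tolerance and the example numbers are assumptions for illustration, not recommendations.

```python
from __future__ import annotations


def cross_check(ai_values: dict[str, float], reference: dict[str, float],
                max_rel_diff: float = 0.10) -> dict[str, str]:
    """Label each reference nutrient 'ok', 'mismatch' (off by more than max_rel_diff) or 'missing'."""
    verdicts: dict[str, str] = {}
    for nutrient, ref in reference.items():
        if nutrient not in ai_values:
            verdicts[nutrient] = "missing"
        elif ref == 0:
            verdicts[nutrient] = "ok" if ai_values[nutrient] == 0 else "mismatch"
        else:
            rel_diff = abs(ai_values[nutrient] - ref) / abs(ref)
            verdicts[nutrient] = "ok" if rel_diff <= max_rel_diff else "mismatch"
    return verdicts


# Hypothetical numbers: what the assistant said vs. what the verified source says.
ai_answer = {"kcal": 210.0, "protein_g": 4.0}
verified = {"kcal": 200.0, "protein_g": 10.0, "fat_g": 5.0}
print(cross_check(ai_answer, verified))
# {'kcal': 'ok', 'protein_g': 'mismatch', 'fat_g': 'missing'}
```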

How does Nutriely use AI?

For now, we sprinkle in AI for various tedious tasks that language models are great at: we use it to enhance our ingredient mapping, for internal translations and for various formatting tasks. Those are tasks that would otherwise take a lot of time and maintenance.

Given our knowledge and understanding of AI, we do not rely on it for any nutrition-related information or calculations. All of our nutrition data comes from actual nutrition databases, is verified by our team and can be traced back to our data sources.

As for the future, many exciting features are likely to involve AI. In particular, AI image recognition is a field we definitely plan to get into.

Published on: 19.02.2025 Author: Martins Laganovskis

Why should you care what I think?

I would not call myself an AI expert by any means; I know enough to realize I am far from that. But I have worked on various projects directly relying on AI, I have learned about neural networks and have even built small networks myself. With this experience I can clearly see both the amazing use cases and the enormous potential for failure.

In case you want to learn more about LLMs, here is a video to get you started.

Try Nutriely for free.

Download our app and start your free trial with all premium features included.